Get a 100 Lighthouse performance score with a React app

Written by Mladen, Software Engineer

In this article, I will walk you through a high-level setup for achieving those ever-elusive high scores in your Lighthouse metrics, even if you use a proper React app and fetch everything from a CMS!

In subsequent posts, I might dive deeper into the details of each individual component of this setup.

What are we looking at?

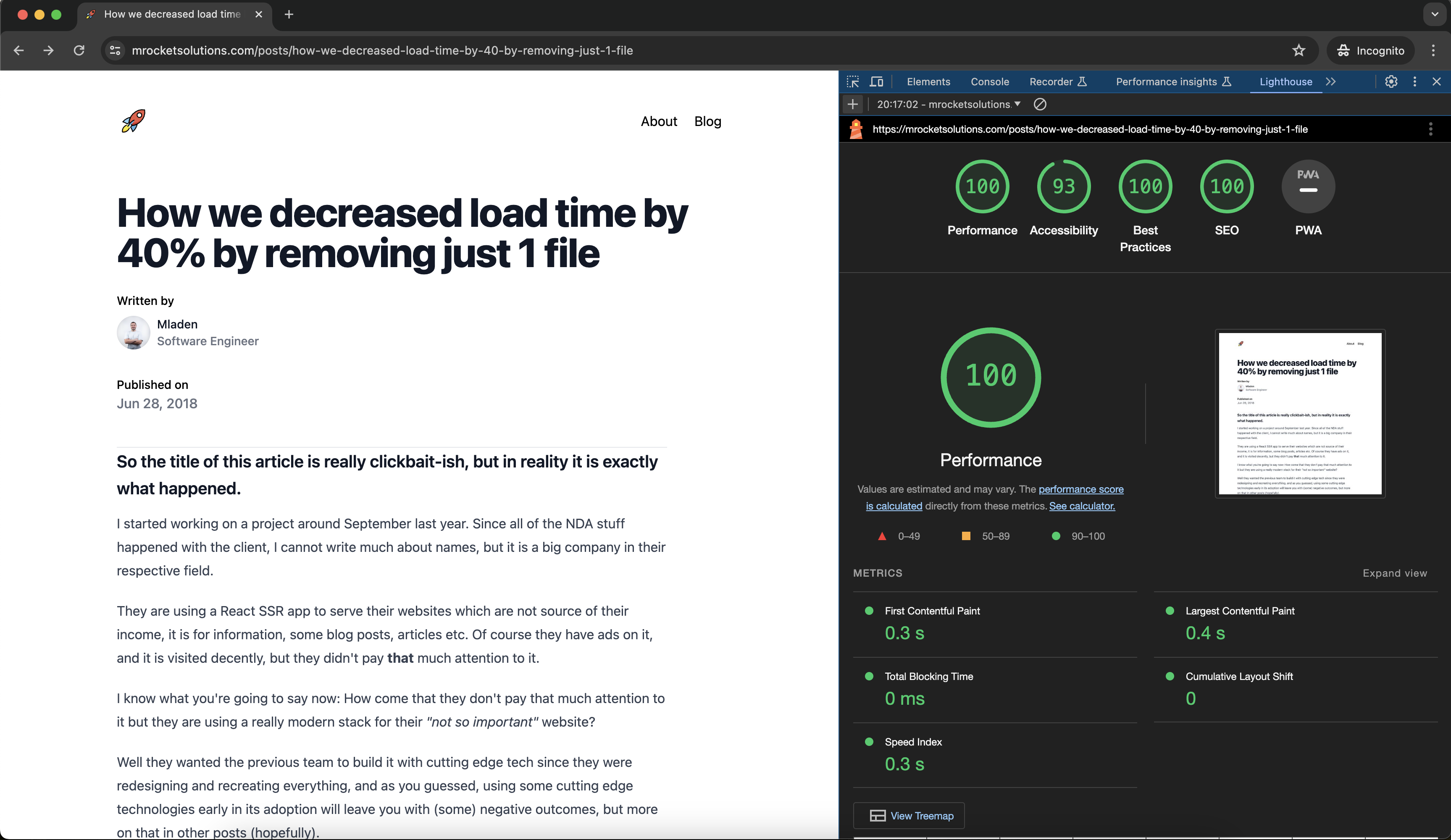

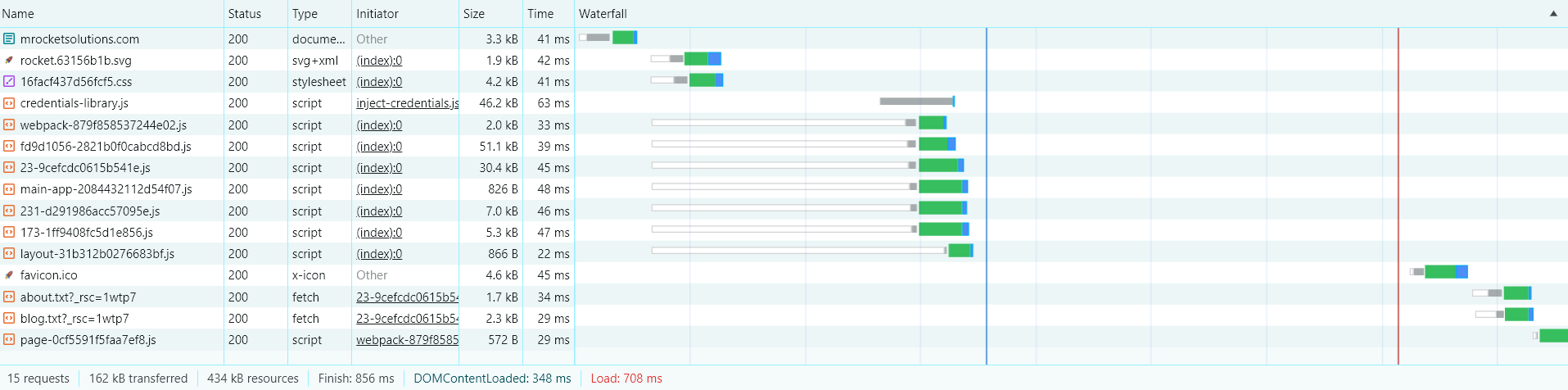

You have probably looked at your network tab numerous times while debugging API calls and inspecting requests and responses, but I bet you haven't paid much attention to the Waterfall column of this table. That column can paint an almost complete picture of a website from a performance perspective, and we'll refer back to it in the sections below.

What can we observe from this waterfall example?

First of all, you can notice that the home page loaded extremely fast, it took just 708ms for it to completely load (but not fully finish - more on that later), and the total downloaded content is just 162 kB, which is insane compared to the whopping 490 MB of node_modules this small project has!

We can group this waterfall into roughly 3 parts:

- HTML Document

- Assets & JS

- Other files that are not needed for the initial render (but important for performance otherwise!)

The HTML document pretty much lays down the "plan": it tells the browser which assets and JS files are needed next and that they should be downloaded in parallel. You can observe this in the waterfall as a group of files that start downloading at the same time. The third group of files is downloaded after everything else has completed; it "preloads" the pages linked from the navigation bar and speeds up subsequent navigation to them.

Let's talk about the two things that make this happen: building and serving.

Building the app

Even though there is now a variety of frameworks that can do something similar, I keep coming back to Next.js, as I believe it is the most mature React server-side-rendering option out there. The framework's age really shows in the amount of optimization its team has put into it.

Cool, but the only time I previously managed to get 100 performance score is when I deployed a static html + css website, how the hell did you manage it with Next.js?

- Someone on the internet

Well, we're pretty much doing the same thing :)

If you set the following in your next.config.js file

    const nextConfig = {
      output: 'export'
    }

    module.exports = nextConfig

and run

    npm run build

it will generate an out folder with static HTML, CSS, and JS!

Not only that, but Next.js magic will split all your code per page and optimize as much as it can, shrinking your JS files to a minimum!

Using the Next router will also enable prefetching of your next pages so when you click on another page, it will appear almost instantly!

First of all, you can notice that the home page loaded extremely fast, it took just 708ms for it to completely load (but not fully finish - more on that later)

If you go back to this paragraph and the waterfall graph, you can notice a couple of files downloaded after the red line, which marks the load event. Those are metadata for the about and blog pages, which are in my navbar!

Next.js loaded everything needed for this page, rendered it fully, which makes it blazing fast, and only then loaded some metadata for other pages without blocking anything else. Setting up something like this ~5 years ago required a team of webpack and JS gurus and ninjas, so I'm in awe that we get configurations like this out of the box today.
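If you want to check the "after the red line" behavior yourself, here is a minimal sketch that splits requests into those that started before the load event and those that started after it. The helper name `splitByLoadEvent` and the sample entries are made up for illustration; only the browser Performance API calls mentioned in the comment are real.

```javascript
// Split resource-timing entries into those that started before the load
// event and those that started after it (the red line in the waterfall).
function splitByLoadEvent(resources, loadEventStart) {
  const before = [];
  const after = [];
  for (const entry of resources) {
    const bucket = entry.startTime < loadEventStart ? before : after;
    bucket.push(entry.name);
  }
  return { before, after };
}

// In a browser console you could feed it real data, for example:
//   const [nav] = performance.getEntriesByType('navigation');
//   splitByLoadEvent(performance.getEntriesByType('resource'), nav.loadEventStart);

// Hypothetical entries (names and timings are invented for this sketch).
const sample = [
  { name: 'main.js', startTime: 120 },
  { name: 'app.css', startTime: 125 },
  { name: 'about-prefetch', startTime: 760 },
  { name: 'blog-prefetch', startTime: 765 },
];

const result = splitByLoadEvent(sample, 708);
console.log(result);
```

Anything that lands in `after` did not delay the initial render, which is exactly what we want from prefetched navigation targets.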

But wait a minute, I told you about fetching the data from a CMS, how is that being handled?

Using the Next.js generateStaticParams function in combination with dynamic route segments will fetch all your CMS content at build time and generate a static HTML file for each blog post page, as well as for the blog post list, thereby eliminating another set of API calls from the client!
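To make the idea a bit more tangible, here is a rough sketch of the mapping that happens at build time: a list of posts becomes a list of params, and each param becomes one static file. This is not Next.js internals; the sample posts, the helper names, and the output path pattern are assumptions for illustration, and the real file layout depends on your Next.js version and config.

```javascript
// Hypothetical stand-in for the list of posts returned by the CMS.
const samplePosts = [
  { id: '1', slug: 'hello-world' },
  { id: '2', slug: 'lighthouse-100' },
];

// Same shape generateStaticParams is expected to return: one object per
// page, keyed by the dynamic segment name ([slug] -> slug).
function toStaticParams(posts) {
  return posts.map((post) => ({ slug: post.slug }));
}

// Illustrative only: the kind of file a static export could emit per param.
function toOutputPath(params) {
  return `out/posts/${params.slug}.html`;
}

const params = toStaticParams(samplePosts);
const files = params.map(toOutputPath);
console.log(files);
```

The point is that the number of pages is decided entirely at build time, so the browser never has to ask the CMS for anything.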

Here is all the magic in the code snippet from my page @ src/app/posts/[slug]/page.jsx

    const AllPosts = `
      query AllPosts {
        posts(orderBy: publishedAt_DESC) {
          id
          slug
        }
      }
    `;

    // Fetch the id and slug of every post from the CMS
    async function getPosts() {
      const allPosts = await fetch(process.env.HYGRAPH_ENDPOINT, {
        method: 'POST',
        headers: {
          'Content-Type': 'application/json'
        },
        body: JSON.stringify({
          query: AllPosts
        })
      }).then((res) => res.json())

      return allPosts.data.posts
    }

    // Runs at build time: one static page is generated per returned slug
    export async function generateStaticParams() {
      const posts = await getPosts()

      return posts.map((post) => ({
        slug: post.slug
      }))
    }

Serving the app

We have our static and optimized files, now we have to serve our app somewhere.

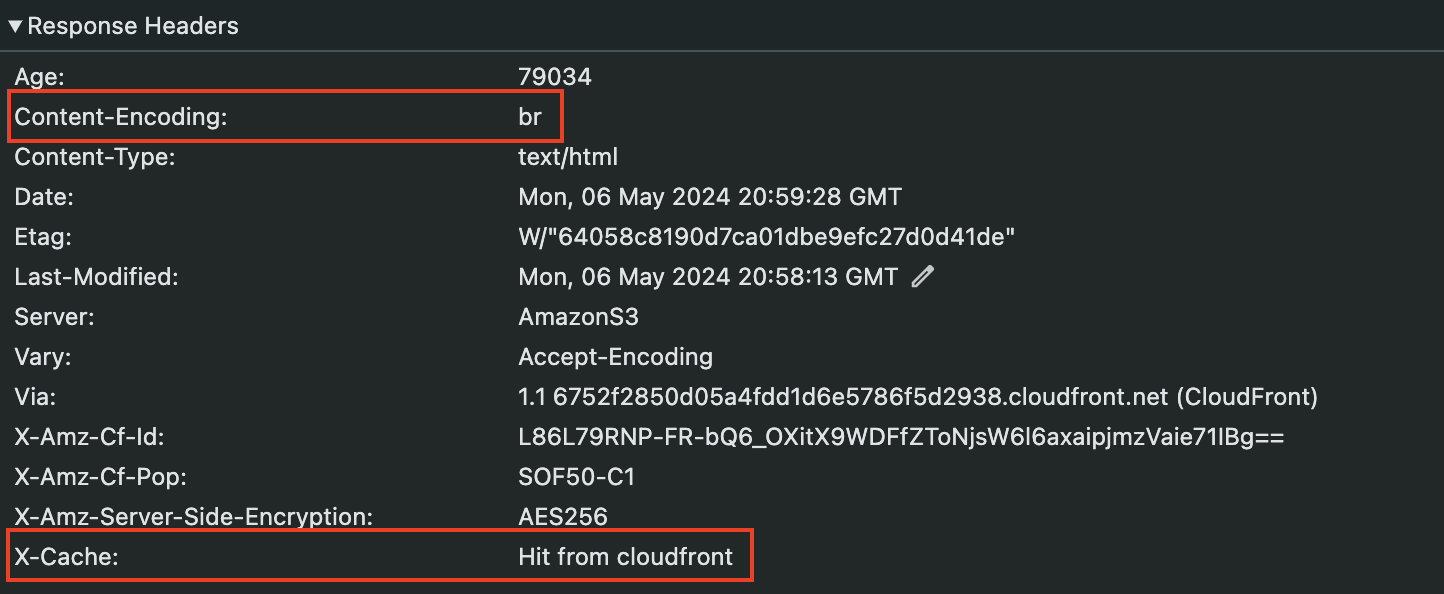

We will store our files in an S3 bucket and place the CloudFront CDN in front of it.

What do we get from that setup?

Looking at one of the files in the network tab, you will notice that the file is encoded with br, which stands for Brotli, a compression algorithm that compresses around 15-20% better than gzip, which was previously the standard.

On top of that, CloudFront is caching our data, which you can see from the Hit from cloudfront message. It means that your static content (which is your whole app at this point) is physically available in a location near you, which brings latency to a minimum!

Using a CDN with your fully static app makes so much sense, and when you add a good compression algorithm on top of that, plus the build-time optimizations, it can't get any better than that!
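If you are curious how the two algorithms compare on your own files, Node ships both in its built-in zlib module. The snippet below is a small sketch with an invented, very repetitive HTML-like payload, so the numbers it prints say nothing about real pages; swap in one of your built files to get a meaningful comparison.

```javascript
const zlib = require('zlib');

// Hypothetical, repetitive HTML-like payload standing in for a built page.
const html = Buffer.from(
  '<li class="post"><a href="/posts/example">Example post</a></li>\n'.repeat(500)
);

const gzipped = zlib.gzipSync(html);            // gzip at zlib's default level
const brotlied = zlib.brotliCompressSync(html); // Brotli at Node's default quality

console.log('original bytes:', html.length);
console.log('gzip bytes:    ', gzipped.length);
console.log('brotli bytes:  ', brotlied.length);

// Both formats are lossless: decompressing gives back the original bytes.
const roundTripOk =
  zlib.gunzipSync(gzipped).equals(html) &&
  zlib.brotliDecompressSync(brotlied).equals(html);
console.log('round trip ok: ', roundTripOk);
```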
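You can read the same information programmatically from the response headers. Below is a minimal sketch, assuming the `content-encoding` and `x-cache` headers are visible to your code; `describeDelivery` is a made-up helper name, and the example headers are written by hand rather than taken from a real response.

```javascript
// Summarize how a response was delivered, based on two response headers:
// - content-encoding: "br" means Brotli, "gzip" means gzip
// - x-cache: CloudFront reports values such as "Hit from cloudfront"
//   or "Miss from cloudfront"
// `headers` can be anything with a get(name) method, such as the
// `response.headers` object you get back from fetch().
function describeDelivery(headers) {
  const encoding = headers.get('content-encoding') || 'none';
  const xCache = headers.get('x-cache') || '';
  return {
    encoding,
    servedFromCdnCache: xCache.toLowerCase().startsWith('hit'),
  };
}

// Hand-written headers for illustration only.
const exampleHeaders = new Map([
  ['content-encoding', 'br'],
  ['x-cache', 'Hit from cloudfront'],
]);

const delivery = describeDelivery(exampleHeaders);
console.log(delivery);
```

A first request to a fresh edge location will usually report a miss, so check a second request before worrying about your cache setup.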

Bonus - CMS

Bonus points for getting that 100 SEO score go to Hygraph CMS, as it has good SEO support, a GraphQL API (as you saw in the code snippet above) that makes it easy to write queries, and good templates and starters (which I used for my blog).

Feel free to check out the performance mentioned at https://mrocketsolutions.com/blog